The messaging app Telegram has said it will hand over users' IP addresses and phone numbers to authorities who have search warrants or other valid legal requests.

The change to its terms of service and privacy policy "should discourage criminals", CEO Pavel Durov said in a Telegram post on Monday.

“While 99.999% of Telegram users have nothing to do with crime, the 0.001% involved in illicit activities create a bad image for the entire platform, putting the interests of our almost billion users at risk,” he continued.

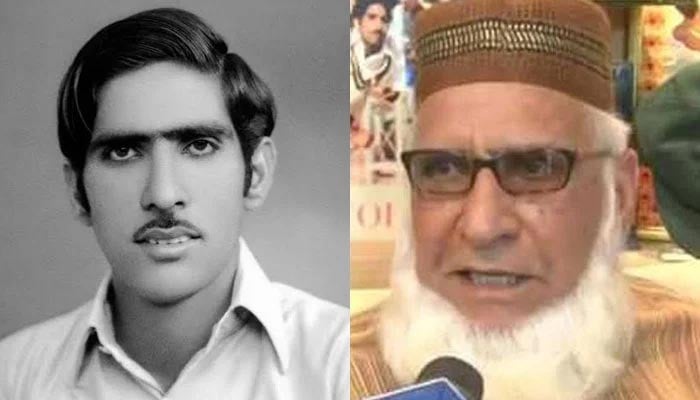

The announcement marks a significant reversal for Mr Durov, the platform’s Russian-born co-founder who was detained by French authorities last month at an airport just north of Paris.

Days later, prosecutors there charged him with enabling criminal activity on the platform. Allegations against him include complicity in spreading child abuse images and in drug trafficking. He was also charged with failing to comply with law enforcement.

Mr Durov, who has denied the charges, lashed out at authorities shortly after his arrest, saying that holding him responsible for crimes committed by third parties on the platform was both "surprising" and "misguided."

Critics say Telegram has become a hotbed of misinformation, child pornography, and terror-related content partly because of a feature that allows groups to have up to 200,000 members.

Meta-owned WhatsApp, by contrast, limits the size of groups to 1,000.

Telegram was scrutinised last month for hosting far-right channels that contributed to violence in English cities.

Earlier this week, Ukraine banned the app on state-issued devices in a bid to minimise threats posed by Russia.

The arrest of the 39-year-old chief executive has sparked debate about the future of free-speech protections on the internet.

After Mr Durov's detention, many people began to question whether Telegram was actually a safe place for political dissidents, according to John Scott-Railton, senior researcher at the University of Toronto's Citizen Lab.

He says this latest policy change is already being greeted with even more alarm in many communities.

"Telegram’s marketing as a platform that would resist government demands attracted people that wanted to feel safe sharing their political views in places like Russia, Belarus, and the Middle East," Mr Scott-Railton said.

"Many are now scrutinizing Telegram's announcement with a basic question in mind: does this mean the platform will start cooperating with authorities in repressive regimes?"

Telegram has not made clear how it will handle demands from the leaders of such regimes in future, he added.

Cybersecurity experts say that while Telegram has removed some groups in the past, it has a far weaker system of moderating extremist and illegal content than competing social media companies and messenger apps.

Before the recent policy expansion, Telegram would only supply information on terror suspects, according to 404 Media.

On Monday, Mr Durov said the app was now using "a dedicated team of moderators" who were leveraging artificial intelligence to conceal problematic content in search results.

But making that type of material harder to find likely won’t be enough to fulfill requirements under French or European law, according to Daphne Keller at Stanford University’s Center for Internet and Society.

“Anything that Telegram employees look at and can recognize with reasonable certainty is illegal, they should be removing entirely,” Ms Keller said.

In some countries, platforms are also required to notify authorities about particular kinds of seriously illegal content, such as child sexual abuse material, she added.

Ms Keller questioned whether the company's changes would be enough to satisfy authorities seeking information about targets of investigations, including who they are communicating with and the content of those messages.

"It sounds like a commitment that is likely less than what law enforcement wants," Ms Keller said.